In today’s data-driven world, organizations are handling massive amounts of information, and petabyte-scale storage is becoming more common. A petabyte, equivalent to 1,024 terabytes or approximately one quadrillion bytes, represents a significant leap in data storage capacity. To put this into perspective, a single petabyte could store 13.3 years of high-definition video or roughly 500 billion pages of printed text. As data volumes continue to grow, understanding petabyte storage is essential for managing these vast datasets effectively.

The Importance of Petabyte-Scale Storage

As data continues to increase at exponential rates, particularly in industries like healthcare, finance, and research, managing large volumes of data requires more than just basic storage solutions. A petabyte of data can hold an overwhelming amount of information, and its scale necessitates advanced approaches to storage, organization, and governance.

A petabyte-scale data repository is equivalent to around 100 copies of the entire U.S. Library of Congress, which is a staggering volume to manage. For large organizations, this size of data presents numerous challenges and requires robust systems and infrastructure to store, manage, and secure it efficiently.

Technical Challenges and Solutions for Managing Petabyte-Scale Data

Managing data at the petabyte level requires special storage architectures and technologies. Traditional storage methods are not sufficient, so enterprises often turn to advanced solutions like Storage Area Networks (SAN) or Network-Attached Storage (NAS), which provide better scalability and fault tolerance.

Data compression techniques also play a significant role in optimizing storage space and improving cost efficiency. Methods like deduplication and lossless compression ensure that data is stored efficiently without compromising its quality. Furthermore, RAID configurations, such as RAID 6 or RAID 10, offer redundancy and fault tolerance, ensuring data remains accessible even if individual drives fail.

Organizing and Structuring Petabyte-Scale Data

One of the most complex aspects of managing petabyte-scale data is organizing it efficiently. Big Data frameworks are essential in processing these large datasets. These frameworks distribute data across multiple machines, enabling parallel processing that accelerates data analysis.

Distributed file systems, such as the Hadoop Distributed File System (HDFS), also play a crucial role in petabyte-scale data management. These systems store data across multiple nodes, ensuring that the data remains accessible even if one or more components fail. For businesses dealing with unstructured data, NoSQL databases like Cassandra and MongoDB are commonly used, as they are flexible and scalable enough to manage vast amounts of data.

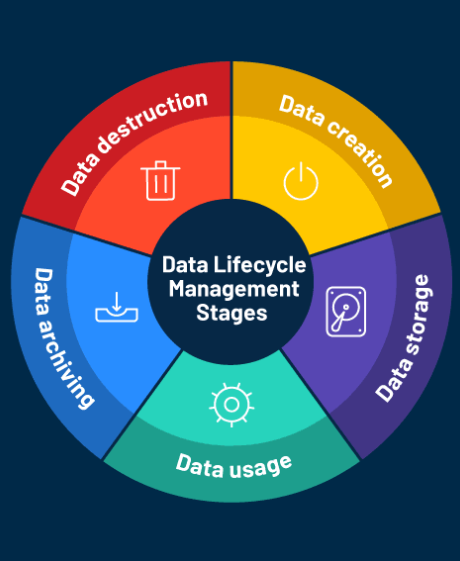

Data Governance at Petabyte Scale

As data grows, maintaining its accuracy, consistency, and security becomes increasingly difficult. Proper data governance ensures that data is not only accessible but also protected and compliant with industry regulations. Metadata management is critical for organizing and retrieving data efficiently. Proper tagging of data allows teams to find and use it as needed, enhancing productivity.

Data lineage—tracing the flow of data from its origin to its final use—is also important. Understanding where data comes from and how it’s been modified helps organizations maintain data integrity and meet compliance standards. Compliance regulations like GDPR and HIPAA make it essential to protect sensitive data from breaches and unauthorized access, which becomes even more complicated at the petabyte scale.

Real-World Applications of Petabyte-Scale Data

Petabyte-scale data is already being applied across various industries. For example, in healthcare, vast datasets are used for genetic research, personalized medicine, and patient care analysis. In finance, petabyte-scale data analytics is key for fraud detection and risk management. Scientific research also benefits from the large-scale storage of data to model and simulate complex phenomena, like climate change.

One notable example comes from a major research institution that used petabyte-scale data to model climate scenarios. Their findings led to impactful insights and policy recommendations, showing the transformative power of managing large data volumes effectively.

Looking Ahead: The Future of Data Storage and Management

Emerging technologies are set to revolutionize data storage and management. Innovations like DNA data storage offer a compact and durable way to store vast amounts of information. Meanwhile, quantum computing promises to accelerate data processing and analysis, potentially allowing organizations to process petabytes of data in a fraction of the time it currently takes.

As the amount of data grows, organizations will face new challenges in managing these massive datasets. However, these challenges also present opportunities to innovate and improve business operations through better data management and advanced technologies.

Conclusion

Understanding and managing petabyte-scale data is no longer optional for large organizations—it’s a critical part of staying competitive in the modern world. Proper data governance and advanced storage solutions are essential to ensure that massive datasets remain secure, compliant, and accessible. By investing in the right strategies and technologies, businesses can harness the potential of their data to drive innovation and make informed decisions.